Abstract

Controlling a brain-computer interface (BCI) is a difficult task that requires extensive training. Particularly in the case of motor imagery BCIs, users may need several training sessions before they learn how to generate the desired brain activity and reach an acceptable level of performance. A typical training protocol for such BCIs consists of the user executing a motor imagery task, followed by the presentation of an extending bar or a moving object on a computer screen. In this chapter, we discuss the importance of visual feedback that resembles human actions, the effect of human factors such as confidence and motivation, and the role of embodiment in the learning process of a motor imagery task. Our results from a series of experiments in which users operated a humanlike android robot through a BCI confirm that realistic visual feedback can induce a sense of embodiment, which promotes significant learning of the motor imagery task in a short amount of time. We review the impact of humanlike visual feedback on the optimized modulation of brain activity by BCI users.

Keywords

- motor imagery

- BCI training

- visual feedback

- android robot

- positive bias

- embodiment

- performance

- neurorehabilitation

1. Introduction

Brain-computer interfaces (BCIs) have for years been considered a new means of control and communication with the outside world, not only for disabled patients who have lost motor control [1, 2] or speech abilities [3], but also for healthy users seeking new ways of interacting with virtual reality (VR) environments [4] and gaming applications [5]. However, despite their popularity and potential, BCIs remain mostly confined to laboratories and have barely been commercialized for real-world applications. The main reason behind this slow progress is the lack of reliability and the poor performance of BCI systems [6]. Even the best BCI classifiers developed to date cannot yet extract the relevant features from brain activity with high accuracy and robustness, particularly when the activity is recorded with electroencephalography (EEG) and contains noise. Many BCI researchers have devoted considerable effort to developing systems and algorithms that can decode EEG activity with high accuracy [7]. However, besides the algorithms, there is another element in the BCI loop that often gets neglected: the human user, who is the source of the input signals [6, 8]. Although it has been shown that not every user is capable of controlling a BCI, a phenomenon known as BCI illiteracy [9], most users can reach a decent level of “skill” after a few training sessions.

Motor imagery-based BCIs demand considerably longer training than ERP-based BCIs (such as the P300 speller) or BCIs that use steady-state visual-evoked potentials (SSVEPs). This is because the motor imagery task, the mental rehearsal of a movement without actually performing it, is counterintuitive for the majority of individuals. Most users cannot form a vivid picture of the movement and its kinesthetic experience. Hwang et al. refer to this as the unknown “feel of motor imagery” [10]. An imagined action can range from the visualization of a self-performed movement from a first-person perspective, to a third-person view of one’s own body moving, to the manipulation of an external object that is either physical or imaginary [11]. Although these types of motor imagery all involve voluntary actions, they may not involve the same cognitive processes. For novice BCI users, instructions about the motor imagery task are normally given verbally by an experimenter, and it is up to the user to find, by trial and error, the optimal image that leads to high performance.

On the other hand, as with any other interface, BCI users should receive feedback on their performance in order to close the control loop between themselves and the interface. Over the years, various feedback paradigms for motor imagery training have been proposed, most of them based on visual and auditory feedback [12, 13]. One of the main issues in the design of visual feedback in most motor imagery-based BCIs is that the feedback presentation is not congruent with the subject’s image of a bodily movement. For example, in the training paradigm introduced by Pfurtscheller and Neuper, subjects imagined either a right- or a left-hand movement and watched a horizontal feedback bar on a computer screen that extended to the right or to the left based on the classifier output [12]. Blankertz et al. presented a falling ball on the screen that was displaced horizontally to the left or right side if the user’s left- or right-hand imagery was successfully detected by the classifier [13]. In another study, Nijboer et al. employed two feedback designs: visual feedback with a cursor on a screen that moved up and down based on the subject’s sensorimotor rhythm, and auditory feedback that presented different types of sound in the presence or absence of motor imagery activation [14]. In all of these examples, the feedback design bore no congruity with the type of image that the subjects held (the image of a bodily hand or foot). Not only can the disparity between the visual feedback and the type of image confuse the subjects during the task, but it also prevents them from obtaining “the feel of motor imagery” and correcting their imagery strategy.

To overcome this deficiency, some studies have employed a dual-modality design. For instance, Chatterjee et al. introduced a vibrotactile feedback paradigm that delivered haptic information during BCI control [15]. Every time subjects imagined a hand movement, the classifier result was presented to them as a cursor movement (visual feedback) and a vibration on the corresponding arm (tactile feedback). A design like this could substantially change the interaction a clinical BCI user has with a neuroprosthesis and may facilitate the decoding of sensorimotor rhythms during neurorehabilitation therapy with BCIs [16]; however, in the case of a healthy user, applying vibration to a part of the body that is not involved in the imagined movement (the arm instead of the hand) can again disturb the user’s performance of the motor imagery task.

Another commonly faced problem in BCI training protocols is the lack of motivation among novice users. Motor imagery BCIs require very long training, which is often accompanied by unsuccessful and unsatisfying results in the beginning. It has been shown that motivation [17, 18], along with other mental states such as fatigue and frustration [19], can substantially influence BCI performance. To alleviate this problem, some previous studies have focused on designing a more interactive feedback environment by means of virtual reality techniques [18, 20]. A few others have tried to improve users’ confidence and perception of control over the BCI system by intentionally biasing the presented feedback accuracy [21, 22].

What is important, and often neglected in BCI research, is that the interaction between the user and the interface is the most critical component in the BCI loop; an inappropriate training design can therefore hinder the user’s learning of the task and of BCI skills. In this chapter, we address the importance of training and feedback design in the production and control of the EEG components required for a motor imagery-based BCI. We first review research on the compatibility of the feedback appearance with a real human body and its impact on learning the motor imagery task. We then discuss work that has tried to improve users’ motivation, either by making the environment playful or by positively faking their performance. Next, we investigate the role of embodiment, the feeling of owning a controlled body, which has long been disregarded in BCI research. In the final part of this chapter, we introduce our android-based training paradigm, which has shown promising potential for improving motor imagery learning in novice BCI users.

2. Motor imagery and action observation

It has been shown that mental imagery of a motor action can produce cortical activation similar to that produced by executing the same action [23, 24]. For instance, executing a hand movement suppresses the mu rhythm (8–12 Hz) in sensorimotor regions, and so does motor imagery of the corresponding hand [25]. By monitoring single-trial EEG signals and measuring event-related desynchronization (ERD), it is even possible to detect whether the imagined hand was the right or the left one [26]. However, previous studies suggest that hand imagery can only be detected at a high rate when the user employs a kinesthetic motor imagery strategy (a first-person process) [27]. In the same study, Neuper et al. report that observing a left- or a right-hand movement could also lead to high classification accuracies at parieto-occipital regions [27]. Many neuroimaging studies have found empirical evidence that combining motor imagery with action observation can induce stronger cortical activation than either condition alone [28]. This has been associated with the firing of mirror neurons [29], which become active during the observation of a motion and represent high-level information about goals and intentions [28]. It also indicates a shared neurocognitive process between motor imagery and action observation that could be utilized in BCI training and control. In particular, if the observed action is congruent with the motor imagery, so that the observed image is a simulation of one’s own action, the combination of the two conditions can lead to a “sense of effort,” a sense of agency, and the imagined kinesthetic sensations that would arise during one’s own motor execution [30].
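ERD is commonly quantified as the percentage change in band power during the task relative to a reference (rest) interval, ERD% = (A − R)/R × 100, where negative values indicate power suppression. The minimal Python sketch below illustrates this on synthetic data. The naive DFT band-power estimate, the function names, the 128 Hz sampling rate, and the C3/C4 decision rule are illustrative assumptions, not the processing pipeline used in the cited studies; the toy rule only reflects the usual contralateral pattern, in which right-hand imagery tends to produce stronger ERD over the left hemisphere (around C3).

```python
import math


def band_power(signal, fs, f_lo=8.0, f_hi=12.0):
    """Mean spectral power of `signal` between f_lo and f_hi (Hz).

    Uses a naive O(N^2) DFT so the sketch needs no third-party packages;
    a real pipeline would use Welch's method or a filter bank instead.
    """
    n = len(signal)
    mean = sum(signal) / n
    x = [s - mean for s in signal]  # remove the DC offset
    powers = []
    for k in range(1, n // 2 + 1):
        freq = k * fs / n
        if f_lo <= freq <= f_hi:
            re = sum(x[t] * math.cos(2 * math.pi * k * t / n) for t in range(n))
            im = -sum(x[t] * math.sin(2 * math.pi * k * t / n) for t in range(n))
            powers.append((re * re + im * im) / n)
    return sum(powers) / len(powers) if powers else 0.0


def erd_percent(reference, activity, fs):
    """ERD% = (A - R) / R * 100; negative values mean mu-band suppression."""
    r = band_power(reference, fs)
    a = band_power(activity, fs)
    return (a - r) / r * 100.0


def guess_hand(erd_c3, erd_c4):
    """Toy rule (hypothetical): stronger, i.e. more negative, ERD over C3
    (left hemisphere) suggests right-hand imagery, and vice versa."""
    return "right" if erd_c3 < erd_c4 else "left"


# Synthetic example: a 10 Hz mu rhythm whose amplitude halves during imagery.
fs = 128
rest = [2.0 * math.sin(2 * math.pi * 10 * i / fs) for i in range(fs)]
imagery = [1.0 * math.sin(2 * math.pi * 10 * i / fs) for i in range(fs)]
print(round(erd_percent(rest, imagery, fs), 1))  # -75.0
```

Halving the oscillation amplitude quarters its power, which is why the example yields an ERD of about −75%.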

Ono et al. examined the effect of visual feedback on ERD during a motor imagery task [31]. Over a series of training sessions, they trained different groups of subjects with different types of visual feedback: a conventional feedback bar, a human hand shown on a screen in front of the subject, and a human hand shown on a screen as an extension of the subject’s own arm. They found that by the end of training, the group that received anatomically congruent feedback produced the highest ERD values and classification accuracy. Neuper et al. also investigated the impact of visual feedback presentation on sensorimotor EEG rhythms and BCI performance [32]. They trained two groups of subjects on a motor imagery-based BCI using two feedback designs: realistic feedback (a video of a moving hand grasping a glass) and abstract feedback (a bar that extended horizontally). Their results, however, showed no difference between the two feedback groups in terms of motor imagery learning and ERD changes. As the authors noted in their discussion, this could be explained by the short training period and the small number of feedback sessions.

With recent advances in videogames and VR technology, a richer, more realistic, and more engaging visual presentation of the BCI output has become possible. Pineda et al. designed a three-dimensional first-person shooter game that enabled BCI users to make navigational movements through left and right motor imagery [33]. Their results indicated that subjects could learn to control mu rhythm levels very quickly, within approximately 3–10 hours of training scheduled over five weeks. Leeb et al. also reported a case study with a tetraplegic patient who was able to navigate through VR by imagining movements of his feet, which were translated into movements of an avatar [34]. The most obvious benefits of VR in constructing visual feedback are the richness of detail that can be incorporated, the sense of embodiment it induces (see Section 4), and its relatively low cost. In terms of detail in particular, the feedback can involve different types of movement and include goal-oriented tasks. Past studies have shown that the motor cortex is sensitive to different forms of observed motor behavior [35] and to subjective perspective [30, 36]. Muthukumaraswamy et al. showed that observing an object-directed precision grip produces more mu suppression than observing a non-object-directed grip [35]. In our previous study, we compared motor imagery learning between two groups of BCI users who operated either a pair of robotic grippers or a pair of humanlike robotic hands [8]. We found more robust learning of the BCI task in the second group, who were trained with the humanlike robotic hands. This result provides evidence that visual feedback with a more detailed appearance and actions compatible with one’s real hand can exert a larger effect on neural plasticity and the reinforcement of motor imagery learning.

It is worth noting that BCI training combined with visual feedback of a body movement (action observation) has also been employed in neurorehabilitation studies, including studies with stroke patients [37, 38, 39, 40, 41, 42]. It has been suggested that providing anthropomorphic feedback during motor imagery works in a similar way to mirror therapy for phantom limb patients [39]. That is, feedback of a bodily movement can activate neural networks associated with the action observation system and induce “motor resonance” [40]. By directly matching the observed or imagined action onto the internal simulation of that action, motor resonance can thereby further facilitate the relearning of impaired motor functions [41]. For instance, Foldes et al. trained spinal cord injury patients with hand paralysis on a motor imagery-based BCI combined with virtual hand feedback. All patients could successfully modulate their brain activity to grasp the virtual hand, and two of the three participants improved their sensorimotor rhythms after only one session of feedback training [39]. Kim et al. also combined action observation training with a motor imagery-based BCI and found promising results in terms of actual functional improvements in upper arm movement in stroke patients [42].

The above review shows that a neurofeedback paradigm that merges motor imagery with the observation of a bodily action has the potential to promote plastic changes in somatosensory activation, the recovery of motor functions, and the improvement of motor performance [43]. In a very similar way, such a combination can bring significant benefits to BCI training by helping the user to activate motor-related cortical areas and generate brain signals that are easily detectable by the BCI classifier.

3. Human factors and BCI learning

To control a BCI, the user has to perform a mental imagery task and generate brain activity that signal-processing algorithms can distinguish. Modulating one’s own brain signals is not an intuitive task, and therefore the user needs to practice and learn the BCI “skill.” However, efficient skill learning requires optimized training protocols that consider the user’s psychological states (such as motivation, attention, confidence, and satisfaction) in order to elicit more effort and better performance from the user [44]. Kleih et al. showed that in the control of a P300 BCI, P300 amplitude was significantly correlated with self-rated motivation; that is, highly motivated subjects were able to communicate through the BCI faster than less motivated subjects [45]. In another BCI study with ALS patients, Nijboer et al. reported that motivational factors, specifically challenge and confidence, were positively correlated with BCI performance, whereas fear had a negative influence [46]. It has also been suggested that even highly motivated users can experience a low level of satisfaction if they do not succeed in accomplishing the BCI-control task [47].

To overcome such issues, many researchers have explored alternative BCI training protocols. Leeb et al. suggested employing VR environments to design attractive BCI training paradigms that increase users’ engagement [18]. Their results show that users are likely to perform better in a VR navigation task than in conventional training with cue-based feedback. Lotte et al. proposed improving engagement and motivation in a social context by applying a BCI game between two users [44]. Users could take part either in a collaborative game, where the sum of both users’ BCI outputs was used to direct a ball on a screen, or in a competitive version, where the users had to push the ball in opposite directions. They observed that the multiplayer versions of the game could effectively improve BCI performance compared to the single-player version.
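The two game modes differ mainly in how the two users’ classifier outputs are combined into one ball movement. The sketch below assumes outputs scaled to [−1, 1] and a one-dimensional ball position; the function names, the clipping, and the treatment of the competitive mode as a difference between non-negative push strengths are illustrative assumptions, not the implementation used in [44].

```python
def clip(value, lo=-1.0, hi=1.0):
    """Limit a classifier output to the assumed range [lo, hi]."""
    return max(lo, min(hi, value))


def step_ball(position, out_a, out_b, mode, gain=1.0):
    """Advance a hypothetical two-player BCI ball by one update.

    out_a, out_b: each user's classifier output, assumed scaled to [-1, 1].
    "collaborative": both users steer the same ball, so the outputs are summed.
    "competitive": user A pushes toward +, user B toward -, so each output is
    read as a push strength (negatives count as zero) and only the difference
    between the two pushes moves the ball.
    """
    a, b = clip(out_a), clip(out_b)
    if mode == "collaborative":
        velocity = a + b
    elif mode == "competitive":
        velocity = max(a, 0.0) - max(b, 0.0)
    else:
        raise ValueError(f"unknown mode: {mode!r}")
    return position + gain * velocity


# Both users succeed: their efforts add up in the collaborative game ...
print(step_ball(0.0, 0.5, 0.25, "collaborative"))  # 0.75
# ... but partly cancel out in the competitive one.
print(step_ball(0.0, 0.5, 0.25, "competitive"))    # 0.25
```

Summing the outputs rewards both users for producing the same class reliably, whereas the difference makes each user’s success directly count against the other’s.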

Multimodality and closing the sensorimotor loop have also been suggested as ways to increase users’ engagement and performance. Jeunet et al. compared users’ performance in a motor imagery-based multitask BCI with different feedback modalities (visual vs. tactile) and found a significant improvement when subjects received continuous tactile feedback compared to equivalent visual feedback [48]. This is consistent with the study in [16], where haptic feedback, provided in synchrony with the subject’s execution of a motor imagery task, could facilitate the decoding of movement intentions and increase classification accuracy for both healthy subjects and stroke patients.

In addition to the above strategies, some studies have proposed manipulating the feedback, either by biasing the feedback accuracy (i.e., giving users the perception that they did better or worse than they actually did) or by error ignoring (i.e., presenting feedback only when the user performed the task correctly) [21, 22, 49, 50]. Barbero et al. investigated the influence of biased feedback on BCI performance when subjects navigated a falling ball on a screen through right- and left-hand imagery. They found that subjects with poor performance benefited from positive biasing of their performance level, whereas for those already capable of the BCI task, biased feedback impeded the results [21]. In contrast, Gonzalez-Franco et al. found larger learning effects for negative feedback than for positive feedback [49]. In our previous studies with BCI operation of a pair of humanlike robotic hands, we found a general improvement both when subjects received positively biased feedback of their BCI performance and when their mistakes were not presented to them, that is, with error ignoring [22]. This improvement may have been associated with the stronger sense of embodiment that users experienced while operating the hands (see Section 4).
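The feedback manipulations described above can be made concrete as a rule that maps the classifier’s true outcome on each trial to what the user is shown. The sketch below is a simplified, hypothetical model: positive bias turns an incorrect trial into displayed success with a fixed probability `bias`, while the studies in [21, 22] may have biased the feedback differently (for example, by shifting the classifier output). The function names and mode labels are illustrative only.

```python
import random


def shown_feedback(correct, mode, bias=0.0, rng=random):
    """Return what the user sees for one trial: "correct", "incorrect", or None.

    mode "veridical":      the true outcome is shown.
    mode "positive_bias":  an incorrect trial is shown as correct with probability `bias`.
    mode "negative_bias":  a correct trial is shown as incorrect with probability `bias`.
    mode "error_ignoring": incorrect trials receive no feedback at all.
    """
    if mode == "veridical":
        return "correct" if correct else "incorrect"
    if mode == "positive_bias":
        return "correct" if correct or rng.random() < bias else "incorrect"
    if mode == "negative_bias":
        return "correct" if correct and rng.random() >= bias else "incorrect"
    if mode == "error_ignoring":
        return "correct" if correct else None
    raise ValueError(f"unknown mode: {mode!r}")


def perceived_accuracy(outcomes, mode, bias=0.0, seed=0):
    """Fraction of displayed feedback that reads "correct" (trials without feedback are skipped)."""
    rng = random.Random(seed)
    shown = [shown_feedback(c, mode, bias, rng) for c in outcomes]
    visible = [s for s in shown if s is not None]
    return sum(s == "correct" for s in visible) / len(visible) if visible else 0.0


outcomes = [True, False, True, False]  # true classifier accuracy: 50%
print(perceived_accuracy(outcomes, "veridical"))                # 0.5
print(perceived_accuracy(outcomes, "error_ignoring"))           # 1.0
print(perceived_accuracy(outcomes, "positive_bias", bias=1.0))  # 1.0
```

Both manipulations raise the accuracy the user perceives above the classifier’s true accuracy, but in different ways: positive bias misreports failures, whereas error ignoring simply hides them.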

Overall, previous research demonstrates that human psychological factors play a significant role in BCI training. It has even been suggested that parameters such as personality, motivation, and attention span could predict performance in a single session of motor imagery-based BCI control [51]. Future training environments should take these parameters into account to enhance learning of the BCI task and to address the problem of “BCI inefficiency,” which concerns users who are unable to learn BCI control.

4. The role of embodiment

A recent view of cognitive development suggests that our cognitive skills are dynamically shaped through our bodily interaction with the environment and are thus grounded in sensory and motor experiences [52, 53]. Under this view, the mind (mental images, thoughts, representations) arises from processes that are closely related to the brain’s representations of the body and the way it interacts with the real world [54]. This supports the notion of neural plasticity during the learning of new motor skills and tool use, which might lead to the temporary or long-term incorporation of new objects and to augmented cognition [55]. When extended to external body parts (dummy limbs), the experience of embodiment is often described by two senses: body ownership (the extent to which the seen body part is perceived as part of one’s own body) and agency (the extent to which the motions of the seen body are attributed to one’s own movements) [56]. Although there are some counterarguments [57], embodiment is generally considered an important component in establishing interaction between a patient and medical BCIs (such as neural prostheses), allowing better incorporation of the artificial limb [58]. With recent advances in VR and robotic technology, however, embodiment has also been proposed as a factor that reinforces the immersive experience of healthy users.

The first question, however, is whether BCI control of a non-body object can evoke a sense of embodiment in the operator. Here, we mainly focus on the sense of embodiment induced over a humanlike body shape rather than embodiment in physical space or over general objects, as reported in [59]. Perez-Marcos et al. combined virtual reality and a motor imagery-based BCI to induce a sense of ownership over a virtual hand [60]. Although they did not assess motor-related features of the collected EEG signals in this study, they showed that BCI control of a virtual hand could induce an illusion of body ownership and trigger an electromyogram (EMG) response when the virtual hand suddenly fell. Using a real-time fMRI setup, Cohen et al. also proposed robotic embodiment with a humanoid robot in France that was remotely controlled by subjects performing motor imagery in Israel [61]. Although they did not perform a systematic evaluation of the sense of embodiment and the number of subjects was limited, post-experiment interviews indicated a high level of telepresence and embodiment for at least two of the four subjects who participated in the study.

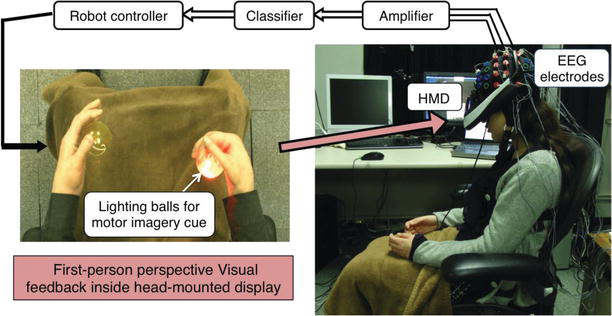

In a similar direction, the authors of this chapter have reported an illusion of body ownership for a pair of humanlike robotic hands controlled by a BCI system [62]. In this experiment, subjects watched the robot’s hands from a first-person perspective in a head-mounted display and performed right- or left-hand motor imagery in order to grasp a lighted ball inside the robot’s hands (Figure 1). Our subjective (questionnaire) and physiological (skin conductance response) measurements revealed that the subjects experienced a feeling of owning the robot’s hands, and this feeling was significantly correlated with their BCI performance [22].

Figure 1.

Users controlled a pair of humanlike robotic hands by performing right- and left-hand imagery while watching first-person perspective images of the robot’s body.

In addition to enhancing the immersive experience, the feeling of embodiment has been shown to have a positive impact on neurofeedback training and motor imagery learning at the neural level. Braun et al. reported a sense of ownership for an anthropomorphic robotic hand that was placed in front of the subjects and controlled by right-hand motor imagery [63]. Interestingly, their results indicated stronger ERD in the alpha and beta frequency bands when the robotic hand was in a congruent position (higher embodiment) than in an incongruent condition. Leeb et al. also compared the influence of feedback types on motor imagery performance and BCI classification accuracy. They found that immersive feedback (walking inside a VR environment) resulted in better task performance than simple BCI feedback (a bar presented on a computer screen), although this did not seem to affect BCI classification accuracy [64].

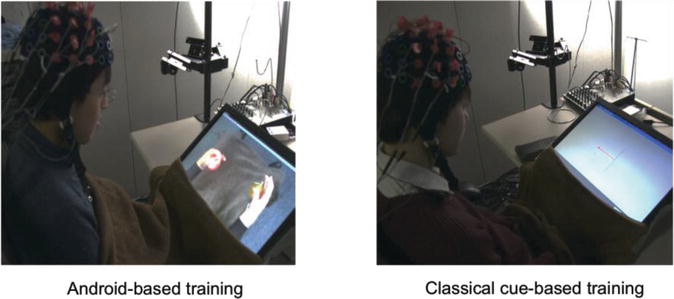

The results of the above studies are all consistent with our previously reported findings in [8], where subjects practiced the motor imagery task in a BCI-control session with two types of feedback (Figure 2A). As mentioned earlier in this chapter, subjects who were trained with a more humanlike android robot performed better on the motor imagery task in the final BCI-control session than those who were trained with a pair of metallic grippers (Figure 2B). In this study, “motor imagery performance” was defined as how well subjects could generate discriminable brain patterns for the two classes of right- and left-hand motor imagery, and it was obtained from Fisher’s discriminant criterion in a linear discriminant analysis applied to the distribution of EEG features [8]. The ΔMotor imagery performance in Figure 2B represents the ratio of this criterion between the two evaluation and training sessions (for more details, refer to [8]). In another study, we also reported that, compared with a classical feedback bar, motor imagery training with humanlike android feedback that induces a sense of embodiment could lead to stronger mu suppression in the sensorimotor areas and eventually improved subjects’ online BCI performance [65].

Figure 2.

Effect of embodiment on motor imagery learning. (A) Two groups of subjects practiced the motor imagery task while receiving visual feedback from either a humanlike android robot or a pair of metallic grippers. (B) Subjects who were trained with the android robot demonstrated significantly more robust learning of the motor imagery task than the group trained with the non-humanlike grippers.
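The “motor imagery performance” measure discussed in this section rests on Fisher’s discriminant criterion. For a single discriminant feature (for example, the LDA projection of each trial’s EEG features), the criterion is commonly written as J = (μ_R − μ_L)² / (σ_R² + σ_L²): the farther apart the class means are relative to the within-class spread, the more discriminable the right- and left-hand patterns. The sketch below computes J on made-up projected values and expresses improvement as a ratio between two sessions. The one-dimensional simplification, the sample values, the function names, and the direction of the ratio (later over earlier session) are assumptions; the exact definition of ΔMotor imagery performance is given in [8].

```python
from statistics import mean, pvariance


def fisher_criterion(right, left):
    """J = (mean_R - mean_L)^2 / (var_R + var_L) for one-dimensional features.

    A larger J means the two motor imagery classes are better separated.
    """
    return (mean(right) - mean(left)) ** 2 / (pvariance(right) + pvariance(left))


def performance_ratio(j_earlier, j_later):
    """Ratio of the criterion between two sessions; > 1 indicates improved separability."""
    return j_later / j_earlier


# Hypothetical LDA-projected trial values for right- and left-hand imagery.
j_early = fisher_criterion([1.0, 3.0], [-1.0, -3.0])  # means +/-2, variances 1 -> 8.0
j_late = fisher_criterion([2.0, 4.0], [-2.0, -4.0])   # means +/-3, variances 1 -> 18.0
print(performance_ratio(j_early, j_late))  # 2.25
```

Because the within-class variance is unchanged between the two hypothetical sessions, the whole gain in J here comes from the class means moving farther apart.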

Research suggests that cortical connections mediating motor activation are formed through experience [66], making perception-action coupling an important functional factor in learning new motor skills [67]. Under this view, a procedural memory of motor programs, together with their sensory concomitants, is stored during motor learning, which gives rise to anticipatory mechanisms that predict the sensorimotor outcomes of planned actions in real time [11]. The use of a humanlike android in our studies could have influenced motor imagery learning in two ways. First, we speculate that the visual feedback provided by the android’s body resembled a self-body action, as we experience it in our daily activities, and therefore matched the visual anticipations of the motor intentions. Second, more detailed and compatible visual feedback from the android’s body (in terms of appearance and motion) could have excited motor memories more intensely, so that subjects trained with a humanlike android recalled more vivid and explicit images of the movement during the imagery task [8].

Not only can embodiment reinforce learning of the motor imagery and BCI task, but the two have also been shown to share spectral and anatomical mechanisms [68]. In [68], subjects watched either a pair of virtual arms or a pair of non-body objects projecting out from their body inside a head-mounted display. For both types of visual feedback, they first received visuotactile stimulation to experience a body ownership illusion similar to the rubber hand illusion (RHI) [69], and were then instructed to perform motor imagery of either their right or left hand. Their overall results demonstrated that both illusory hand ownership and motor imagery were associated with mu-band modulation and, more importantly, that the areas activated during the illusory hand ownership and motor imagery conditions overlapped. This finding suggests that multisensory mechanisms related to the sense of body ownership and embodiment share neural processes with motor imagery and thus could be used in the activation and classification of EEG patterns in BCIs. Indeed, the two processes have been shown to go hand in hand: in [70], we demonstrated that BCI control of a pair of humanlike robotic hands by means of motor imagery induces a higher sense of body ownership and agency than direct control by means of motor execution. It could be speculated that, because of the shared mechanism between embodiment and motor imagery, there is a positive loop effect: motor imagery of the hands induces a strong sense of embodiment, and embodiment activates more motor-related neural activity that the BCI classifier can detect.

5. Our proposed model

Based on our review in this chapter, we summarize three elements that should be considered in the design of a BCI training protocol:

- Feedback should be realistic and compatible with the task content. In particular, in a motor imagery-based BCI, users would benefit from observing movements that are consistent with their mental images.

- Human factors such as motivation, confidence, and fatigue can significantly affect users’ interaction with the BCI system and subsequently influence their performance in the BCI task. Employing interactive environments such as VR and providing positively biased feedback are two techniques that can enhance motor imagery learning, particularly for novice BCI users.

- The sense of embodiment and body ownership interacts positively with subjects’ motor imagery performance; it is therefore important to provide realistic visual feedback that resembles a human body in terms of appearance, movement, and perspective.

By integrating the knowledge obtained in our previous experiments [8, 22, 62] with the above points, we proposed an android-based training protocol in [65]. In this study, two groups of novice participants practiced hand-grasp imagery either with classical cue-based feedback (an arrow and a feedback bar) or by watching first-person perspective images of a humanlike android robot that made hand grasps based on the subject’s EEG patterns (Figure 3). In addition, subjects’ performance was positively biased during the training phase in order to boost their confidence and motivation for the motor imagery task. More importantly, we added a pre-training phase for the android group, in which subjects could practice motor imagery followed by kinesthetic motor actions. Results from this study revealed that participants trained with the android-based BCI achieved significantly higher mu suppression in the sensorimotor areas (C3/C4 scalp positions), as well as significantly better online BCI performance in the final evaluation phase, compared to participants trained with the classical training paradigm. We believe that the improved modulation of sensorimotor rhythms in the proposed training protocol was strongly influenced by the sense of embodiment that participants perceived during BCI control of the robot’s hands.

Figure 3.

Subjects who were trained with a humanlike android robot and experienced a high sense of embodiment revealed a stronger mu suppression in the sensorimotor areas and showed better BCI performance compared to subjects who were trained with a classical feedback bar.

6. Conclusion

In this chapter, we highlighted the importance of the human user in the BCI loop and addressed some of the deficiencies in the training and feedback design of classical motor imagery-based BCI systems. We provided empirical evidence that a careful training design, one that views the BCI experience from the user’s perspective and considers factors such as task-feedback compatibility, motivation, and embodiment, could reinforce users’ learning of the motor imagery task and consequently improve their BCI performance in a very short amount of time. We believe that these results are important for the BCI community and should be taken into account in the future design of BCI systems intended for real-world applications outside the laboratory.

Acknowledgments

This research was supported by Grants-in-Aid for Scientific Research 25220004, 26540109, and 15F15046.

References

- 1.

Wolpaw JR, Birbaumer N, McFarland DJ, Pfurtscheller G, Vaughan TM. Brain-computer interfaces for communication and control. Clinical Neurophysiology. 2002;113(6):767-791.

- 2.

Neuper C, Müller GR, Kübler A, Birbaumer N, Pfurtscheller G. Clinical application of an EEG-based brain-computer interface: A case study in a patient with severe motor impairment. Clinical Neurophysiology. 2003;114(3):399-409.

- 3.

Brumberg JS, Nieto-Castanon A, Kennedy PR, Guenther FH. Brain-computer interfaces for speech communication. Speech Communication. 2010;52(4):367-379.

- 4.

Lécuyer A, Lotte F, Reilly RB, Leeb R, Hirose M, Slater M. Brain-computer interfaces, virtual reality, and videogames. Computer. 2008;41(10):66-72.

- 5.

Nijholt A, Bos DPO, Reuderink B. Turning shortcomings into challenges: Brain-computer interfaces for games. Entertainment Computing. 2009;1(2):85-94.

- 6.

Lotte F, Larrue F, Mühl C. Flaws in current human training protocols for spontaneous brain-computer interfaces: Lessons learned from instructional design. Frontiers in Human Neuroscience. 2013;7:568.

- 7.

Lotte F, Congedo M, Lécuyer A, Lamarche F, Arnaldi B. A review of classification algorithms for EEG-based brain-computer interfaces. Journal of Neural Engineering. 2007;4(2):R1.

- 8.

Alimardani M, Nishio S, Ishiguro H. The importance of visual feedback design in BCIs: From embodiment to motor imagery learning. PLoS One. 2016;11(9):e0161945.

- 9.

Guger C, Edlinger G, Harkam W, Niedermayer I, Pfurtscheller G. How many people are able to operate an EEG-based brain-computer interface (BCI)? IEEE Transactions on Neural Systems and Rehabilitation Engineering. 2003;11(2):145-147.

- 10.

Hwang HJ, Kwon K, Im CH. Neurofeedback-based motor imagery training for brain-computer interface (BCI). Journal of Neuroscience Methods. 2009;179(1):150-156.

- 11.

Annett J. Motor imagery: Perception or action? Neuropsychologia. 1995;33(11):1395-1417.

- 12.

Pfurtscheller G, Neuper C. Motor imagery and direct brain-computer communication. Proceedings of the IEEE. 2001;89(7):1123-1134.

- 13.

Blankertz B, Dornhege G, Krauledat M, Müller KR, Curio G. The non-invasive Berlin brain-computer interface: Fast acquisition of effective performance in untrained subjects. NeuroImage. 2007;37(2):539-550.

- 14.

Nijboer F, Furdea A, Gunst I, Mellinger J, McFarland DJ, Birbaumer N, Kübler A. An auditory brain-computer interface (BCI). Journal of Neuroscience Methods. 2008; 167 (1):43-50 - 15.

Chatterjee A, Aggarwal V, Ramos A, Acharya S, Thakor NV. A brain-computer interface with vibrotactile biofeedback for haptic information. Journal of Neuroengineering and Rehabilitation. 2007; 4 (1):40 - 16.

Gomez-Rodriguez M, Peters J, Hill J, Schölkopf B, Gharabaghi A, Grosse-Wentrup M. Closing the sensorimotor loop: Haptic feedback facilitates decoding of motor imagery. Journal of Neural Engineering. 2011; 8 (3):036005 - 17.

Kleih SC, Riccio A, Mattia D, Kaiser V, Friedrich EVC, Scherer R, Kübler A. et al. Motivation influences performance in SMR-BCI. na. In: Proceeding of the 5th International Brain-Computer Interface Conference. 2011:108-111 - 18.

Leeb R, Lee F, Keinrath C, Scherer R, Bischof H, Pfurtscheller G. Brain-computer communication: Motivation, aim, and impact of exploring a virtual apartment. IEEE Transactions on Neural Systems and Rehabilitation Engineering. 2007; 15 (4):473-482 - 19.

Myrden A, Chau T. Effects of user mental state on EEG-BCI performance. Frontiers in Human Neuroscience. 2015; 9 :308 - 20.

Ron-Angevin R, Díaz-Estrella A. Brain-computer interface: Changes in performance using virtual reality techniques. Neuroscience Letters. 2009; 449 (2):123-127 - 21.

Barbero Á, Grosse-Wentrup M. Biased feedback in brain-computer interfaces. Journal of Neuroengineering and Rehabilitation. 2010; 7 (1):34 - 22.

Alimardani M, Nishio S, Ishiguro H. Effect of biased feedback on motor imagery learning in BCI-teleoperation system. Frontiers in Systems Neuroscience. 2014; 8 :52 - 23.

Jeannerod M, Frak V. Mental imaging of motor activity in humans. Current Opinion in Neurobiology. 1999; 9 (6):735-739 - 24.

Jeannerod M. The representing brain: Neural correlates of motor intention and imagery. Behavioral and Brain Sciences. 1994; 17 (2):187-202 - 25.

Pfurtscheller G, Neuper C. Motor imagery activates primary sensorimotor area in humans. Neuroscience Letters. 1997; 239 (2-3):65-68 - 26.

Pfurtscheller G, Brunner C, Schlögl A, Da Silva FL. Mu rhythm (de) synchronization and EEG single-trial classification of different motor imagery tasks. NeuroImage. 2006; 31 (1):153-159 - 27.

Neuper C, Scherer R, Reiner M, Pfurtscheller G. Imagery of motor actions: Differential effects of kinesthetic and visual-motor mode of imagery in single-trial EEG. Cognitive Brain Research. 2005; 25 (3):668-677 - 28.

Sale P, Franceschini M. Action observation and mirror neuron network: A tool for motor stroke rehabilitation. European Journal of Physical and Rehabilitation Medicine. 2012; 48 (2):313-318 - 29.

Rizzolatti G, Fadiga L, Gallese V, Fogassi L. Premotor cortex and the recognition of motor actions. Cognitive Brain Research. 1996; 3 (2):131-141 - 30.

Vogt S, Di Rienzo F, Collet C, Collins A, Guillot A. Multiple roles of motor imagery during action observation. Frontiers in Human Neuroscience. 2013; 7 :807 - 31.

Ono T, Kimura A, Ushiba J. Daily training with realistic visual feedback improves reproducibility of event-related desynchronisation following hand motor imagery. Clinical Neurophysiology. 2013; 124 (9):1779-1786 - 32.

Neuper C, Scherer R, Wriessnegger S, Pfurtscheller G. Motor imagery and action observation: Modulation of sensorimotor brain rhythms during mental control of a brain-computer interface. Clinical Neurophysiology. 2009; 120 (2):239-247 - 33.

Pineda JA, Silverman DS, Vankov A, Hestenes J. Learning to control brain rhythms: Making a brain-computer interface possible. IEEE Transactions on Neural Systems and Rehabilitation Engineering. 2003; 11 (2):181-184 - 34.

Leeb R, Friedman D, Müller-Putz GR, Scherer R, Slater M, Pfurtscheller G. Self-paced (asynchronous) BCI control of a wheelchair in virtual environments: A case study with a tetraplegic. Computational Intelligence and Neuroscience. 2007; 2007 :7 - 35.

Muthukumaraswamy SD, Johnson BW, McNair NA. Mu rhythm modulation during observation of an object-directed grasp. Cognitive Brain Research. 2004; 19 (2):195-201 - 36.

Ruby P, Decety J. Effect of subjective perspective taking during simulation of action: A PET investigation of agency. Nature Neuroscience. 2001; 4 (5):546 - 37.

i Badia SB, Morgade AG, Samaha H, Verschure PFMJ. Using a hybrid brain computer interface and virtual reality system to monitor and promote cortical reorganization through motor activity and motor imagery training. IEEE Transactions on Neural Systems and Rehabilitation Engineering. 2013; 21 (2):174-181 - 38.

Machado S, Araújo F, Paes F, Velasques B, Cunha M, Budde H, Piedade R, et al. EEG-based brain-computer interfaces: An overview of basic concepts and clinical applications in neurorehabilitation. Reviews in the Neurosciences. 2010; 21 (6):451-468 - 39.

Foldes ST, Weber DJ, Collinger JL. MEG-based neurofeedback for hand rehabilitation. Journal of Neuroengineering and Rehabilitation. 2015; 12 (1):85 - 40.

Van Dokkum LEH, Ward T, Laffont I. Brain computer interfaces for neurorehabilitation—Its current status as a rehabilitation strategy post-stroke. Annals of Physical and Rehabilitation Medicine. 2015; 58 (1):3-8 - 41.

Holper L, Muehlemann T, Scholkmann F, Eng K, Kiper D, Wolf M. Testing the potential of a virtual reality neurorehabilitation system during performance of observation, imagery and imitation of motor actions recorded by wireless functional near-infrared spectroscopy (fNIRS). Journal of Neuroengineering and Rehabilitation. 2010; 7 (1):57 - 42.

Kim T, Kim S, Lee B. Effects of action observational training plus brain-computer interface-based functional electrical stimulation on paretic arm motor recovery in patient with stroke: A randomized controlled trial. Occupational Therapy International. 2016; 23 (1):39-47 - 43.

Wang W, Collinger JL, Perez MA, Tyler-Kabara EC, Cohen LG, Birbaumer N, Weber DJ, et al. Neural interface technology for rehabilitation: Exploiting and promoting neuroplasticity. Physical Medicine and Rehabilitation Clinics. 2010; 21 (1):157-178 - 44.

Lotte F, Jeunet C. Towards improved BCI based on human learning principles. In: 2015 3rd International Winter Conference on Brain-Computer Interface (BCI). Sabuk, South Korea: IEEE; January 2015. pp. 1-4 - 45.

Kleih SC, Nijboer F, Halder S, Kübler A. Motivation modulates the P300 amplitude during brain-computer interface use. Clinical Neurophysiology. 2010; 121 (7):1023-1031 - 46.

Nijboer F, Birbaumer N, Kubler A. The influence of psychological state and motivation on brain-computer interface performance in patients with amyotrophic lateral sclerosis – A longitudinal study. Frontiers in Neuroscience. 2010; 4 :55 - 47.

Spataro R, Chella A, Allison B, Giardina M, Sorbello R, Tramonte S, La Bella V, et al. Reaching and grasping a glass of water by locked-In ALS patients through a BCI-controlled humanoid robot. Frontiers in Human Neuroscience. 2017; 11 :68 - 48.

Jeunet C, Vi C, Spelmezan D, N’Kaoua B, Lotte F, Subramanian S. Continuous tactile feedback for motor-imagery based brain-computer interaction in a multitasking context. In: Human-Computer Interaction. Cham: Springer; September 2015. pp. 488-505 - 49.

Gonzalez-Franco M, Yuan P, Zhang D, Hong B, Gao S. Motor imagery based brain-computer interface: A study of the effect of positive and negative feedback. In: Engineering in Medicine and Biology Society, EMBC, 2011 Annual International Conference of the IEEE; Boston, MA, USA: IEEE; August 2011. pp. 6323-6326 - 50.

Yu T, Xiao J, Wang F, Zhang R, Gu Z, Cichocki A, Li Y. Enhanced motor imagery training using a hybrid BCI with feedback. IEEE Transactions on Biomedical Engineering. 2015; 62 (7):1706-1717 - 51.

Hammer EM, Halder S, Blankertz B, Sannelli C, Dickhaus T, Kleih S, Kübler A, et al. Psychological predictors of SMR-BCI performance. Biological Psychology. 2012; 89 (1):80-86 - 52.

Schöner G. Dynamical systems approaches to cognition. In: Cambridge Handbook of Computational Psychology (Cambridge Handbooks in Psychology). Cambridge: Cambridge University Press; 2008. pp. 101-126 - 53.

Smith LB. Movement matters: The contributions of Esther Thelen. Biological Theory. 2006; 1 (1):87-89 - 54.

Gibbs RW Jr. Embodiment and Cognitive Science. Cambridge: Cambridge University Press; 2005 - 55.

Clark A. Re-inventing ourselves: The plasticity of embodiment, sensing, and mind. Journal of Medicine and Philosophy. 2007; 32 (3):263-282 - 56.

Tsakiris M. My body in the brain: A neurocognitive model of body-ownership. Neuropsychologia. 2010; 48 (3):703-712 - 57.

Aas S, Wasserman D. Brain-computer interfaces and disability: Extending embodiment, reducing stigma? Journal of Medical Ethics. 2016; 42 (1):37-40 - 58.

Tyler DJ. Neural interfaces for somatosensory feedback: Bringing life to a prosthesis. Current Opinion in Neurology. 2015; 28 (6):574 - 59.

LaFleur K, Cassady K, Doud A, Shades K, Rogin E, He B. Quadcopter control in three-dimensional space using a noninvasive motor imagery-based brain-computer interface. Journal of Neural Engineering. 2013; 10 (4):046003 - 60.

Perez-Marcos D, Slater M, Sanchez-Vives MV. Inducing a virtual hand ownership illusion through a brain-computer interface. Neuroreport. 2009; 20 (6):589-594 - 61.

Cohen O, Druon S, Lengagne S, Mendelsohn A, Malach R, Kheddar A, Friedman D. fMRI-based robotic embodiment: Controlling a humanoid robot by thought using real-time fMRI. Presence Teleoperators and Virtual Environments. 2014; 23 (3):229-241 - 62.

Alimardani M, Nishio S, Ishiguro H. Humanlike robot hands controlled by brain activity arouse illusion of ownership in operators. Scientific Reports. 2013; 3 :2396 - 63.

Braun N, Emkes R, Thorne JD, Debener S. Embodied neurofeedback with an anthropomorphic robotic hand. Scientific Reports. 2016; 6 :37696 - 64.

Leeb R, Keinrath C, Friedman D, Guger C, Scherer R, Neuper C, Pfurtscheller G, et al. Walking by thinking: The brainwaves are crucial, not the muscles! Presence Teleoperators and Virtual Environments. 2006; 15 (5):500-514 - 65.

Penaloza CI, Alimardani M, Nishio S. Android feedback-based training modulates sensorimotor rhythms during motor imagery. IEEE Transactions on Neural Systems and Rehabilitation Engineering. 2018; 26 (3):666-674 - 66.

Heyes C, Bird G, Johnson H, Haggard P. Experience modulates automatic imitation. Cognitive Brain Research. 2005; 22 (2):233-240 - 67.

Thelen E. Motor development: A new synthesis. American Psychologist. 1995; 50 (2):79 - 68.

Evans N, Blanke O. Shared electrophysiology mechanisms of body ownership and motor imagery. NeuroImage. 2013; 64 :216-228 - 69.

Botvinick M, Cohen J. Rubber hands ‘feel’ touch that eyes see. Nature. 1998; 391 (6669):756 - 70.

Alimardani M, Nishio S, Ishiguro H. Removal of proprioception by BCI raises a stronger body ownership illusion in control of a humanlike robot. Scientific Reports. 2016; 6 :33514