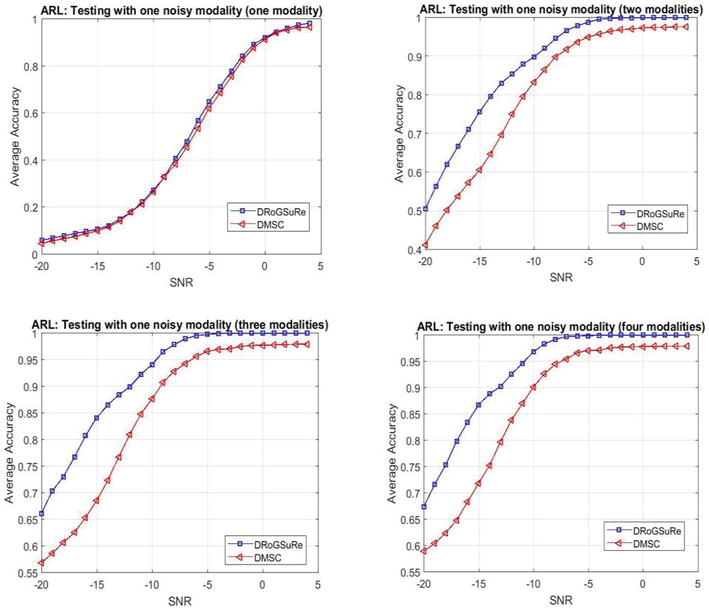

Performance comparison for ARL dataset.

Abstract

In this work, we exploit the deep structure of multi-modal data to robustly uncover the group subspace distribution of the information using the Convolutional Neural Network (CNN) formalism. Upon unfolding the set of subspaces constituting each data modality and learning their corresponding encoders, an optimized integration of the generated inherent information is carried out to yield a characterization of the various classes. Referred to as Deep Robust Group Subspace Recovery (DRoGSuRe), this approach is compared against the independently developed state-of-the-art approach named Deep Multimodal Subspace Clustering (DMSC). Experiments on different multimodal datasets show that our approach is competitive and more robust in the presence of noise.

Keywords

- sparse learning

- computer vision

- unsupervised classification

- subspace clustering

- multi-modal sensor data

1. Introduction

Unsupervised learning is a very challenging topic in Machine Learning (ML); it involves discovering hidden patterns in data for inference without any prior labels. Reliable clustering techniques save the time and effort required to classify or label large datasets that may contain thousands of observations. Multi-modal data, increasingly needed for complex application problems, have become more accessible with recent advances in sensor technology and are in pervasive use in practice. The plurality of sensing modalities in our applications of interest provides diverse and complementary information, necessary to capture the salient characteristics of the data and secure their unique signature. A principled combination of the information contained in the different sensors, and at different scales, is hence pursued to enhance the understanding of the distinct structure of the various classes of data. The objective of this work is to develop a principled multi-modal framework for object clustering in an unsupervised learning scenario. We extract key class-distinct feature signatures from each data modality using a CNN encoder, and we subsequently combine those features non-linearly to generate a discriminative characteristic feature. In so doing, we work on the hypothesis that each data modality is approximated by a Union of low-dimensional Subspaces (UoS), which highlights underlying hidden features. The UoS structure is unveiled by pursuing a sparse self-representation of the given data modality. The subsequent aggregation of the multi-modal subspace structures yields a jointly unified characteristic subspace for each class.

1.1 Related work

Subspace clustering has been introduced as an efficient way of unfolding the union of low-dimensional subspaces underlying high-dimensional data. It has been extensively studied in computer vision owing to the vast availability of visual data, as in [1, 2, 3, 4]. This paradigm has been broadly adopted in many applications, such as image segmentation [5], image compression [6], and object clustering [7].

Uncovering the principles and laying out the fundamentals for multi-modal data has become an important research topic in light of many applications in diverse fields, including image fusion [8], target recognition [9, 10, 11, 12], speaker recognition [13], and handwriting analysis [14]. Convolutional neural networks have been widely used on multi-modal data, as in [15, 16, 17, 18, 19]. A multi-modal subspace clustering-inspired approach was also proposed in [20]. Our formulation leads to a different optimization problem, since the multi-modal sensing seeks to account not only for the private information, which provides the complementarity of the sensors, but also for the common and hidden information. The end result is a network structure different from that of [20], inspired by a different application space. In addition, we address the robustness of fusing multi-modal sensor data, each modality with its own intrinsic structure, along with a potential scaling for viability. We carry out a thorough comparison of our results with the multimodal fusion network in [21] and demonstrate resilient fusion under a variety of limiting scenarios, including limited sensing modalities (sensor failures). In [22], the authors proposed a deep multi-view subspace clustering approach that combines global and local structures to keep samples of the same cluster close together and to push samples of different clusters in different views farther apart. To that end, they used a discriminative constraint between different views, based on the Hadamard product between the features extracted by the convolutional auto-encoder for the different views. In contrast, our approach is based on minimizing the group norm which, as we showed with a derivation in earlier work [23], entails a smaller angle between the different subspaces across all modalities, thus promoting a common latent space.
Moreover, minimizing the group norm also yields a group-sparse solution across data modalities. Sun et al. [24] proposed a deep trainable multi-view subspace clustering method, named self-supervised deep multi-view subspace clustering (S2DMVSC), which learns the common latent subspace using two losses, a spectral clustering loss and a classification loss, in order to denoise the imperfect correlations among data points.

In this paper, we prove that our formulation, which is based on the group norm of the self-representation matrices and the commutation loss between them, provides a natural way to fuse multi-modal data by employing the self-representation matrix as an embedding for each data modality, making our approach robust under different types of potential limitations. Note that our approach preserves the relations among the data points of each individual sensor, which gives more flexibility to each data modality.

1.2 Contributions

Building on the work of Deep Subspace Clustering (DSC) [25], we propose a new and principled multi-modal fusion approach which accounts for a sensor's capacity to house private and unique information about some observed data, as well as information that is likely also captured by, and hence common to, other sensors. This is accounted for in our robust fusion formulation for multi-modal sensor data. Unveiling the complex UoS of multi-modal data also requires us to account for scaling in our proposed formulation and solution, which in turn invokes the learning of multiple/deep-scale Convolutional Neural Networks. By virtue of each sensor's information structure (i.e., private plus shared), our multi-modal fusion approach seeks to enhance and robustify the subspace approximation of shared information for each of the sensors, thus yielding a parallel bank of UoS, one per sensor. The robust deep structure effectively achieves scaling while securing a structured representation for unsupervised inference. We compare our approach to a well-known deep multimodal network [21], which is also based on [25].

In our proposed approach, we thus define the latent space in a way that safeguards each sensor's private information and hence dedicates more degrees of freedom to each of the sensors, in contrast to the approach in [21]. In our evaluation, we use two recently released data sets, each of which we partition into learning and validation subsets. The learned UoS structure for each data set is then used to classify newly observed data points, which illustrates the generalization power of the proposed approach. Different scenarios with additive noise in the training set, the testing set, or both, were used to thoroughly investigate the robustness and resilience of the clustering performance. Experimental results confirm a significant improvement for our Deep Robust Group Subspace Recovery network (DRoGSuRe) under numerous limiting scenarios and demonstrate robustness under these conditions.

The rest of the paper is organized as follows. In Section 2, we provide the problem formulation and background, along with the derivation of our proposed approach, Deep Robust Group Subspace Recovery (DRoGSuRe). In Section 3, we describe the attributes of the proposed approach and contrast it with the Deep Multimodal Subspace Clustering algorithm (DMSC). In Sections 4 and 5, we present a substantive validation along with experimental results of our approach, while Section 6 provides concluding remarks.

2. Deep robust group subspace clustering

2.1 Problem formulation

We assume having a set of data observations, each represented as a

We will exploit the self-expressive property presented in [1, 26], which entails that each data observation

If we stack all the data points

The important information about the relations among data samples is then recorded in the self-representation coefficient matrix

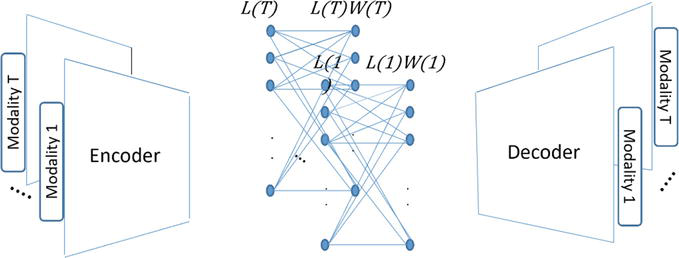

Figure 1.

Deep robust group subspace clustering diagram.
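The self-expressive property of [1, 26] invoked in Section 2.1 can be illustrated on synthetic data. The sketch below is not the DRoGSuRe self-expressive layer: it assumes raw data with no encoder, replaces the learned layer with a per-column Lasso solved by ISTA (iterative soft-thresholding), and forces a zero diagonal so that no point represents itself. The function names, the penalty `lam`, and the two-subspace toy data are hypothetical choices for illustration.

```python
import numpy as np


def soft_threshold(v, tau):
    """Elementwise soft-thresholding: the proximal operator of tau * ||v||_1."""
    return np.sign(v) * np.maximum(np.abs(v) - tau, 0.0)


def sparse_self_expression(X, lam=0.05, n_iter=3000):
    """Column-wise Lasso self-representation solved with ISTA.

    For every column x_i of X (D x N, one sample per column), approximately solve
        min_c 0.5 * ||x_i - X c||^2 + lam * ||c||_1   subject to c_i = 0,
    and store c as column i of C, so that X ~ X C with a zero diagonal.
    """
    _, N = X.shape
    C = np.zeros((N, N))
    for i in range(N):
        others = np.delete(np.arange(N), i)      # exclude x_i to avoid the trivial solution
        A = X[:, others]
        step = 1.0 / np.linalg.norm(A, 2) ** 2    # 1 / Lipschitz constant of the gradient
        c = np.zeros(N - 1)
        for _ in range(n_iter):
            grad = A.T @ (A @ c - X[:, i])
            c = soft_threshold(c - step * grad, step * lam)
        C[others, i] = c
    return C


# Hypothetical toy data: union of two random 2-D subspaces in R^20, 15 unit-norm points on each.
rng = np.random.default_rng(0)
bases = [np.linalg.qr(rng.standard_normal((20, 2)))[0] for _ in range(2)]
X = np.hstack([B @ rng.standard_normal((2, 15)) for B in bases])
X /= np.linalg.norm(X, axis=0)
labels = np.repeat([0, 1], 15)

C = sparse_self_expression(X)
same = labels[:, None] == labels[None, :]
in_subspace_mass = np.abs(C[same]).sum() / np.abs(C).sum()
print(f"share of |C| linking points of the same subspace: {in_subspace_mass:.3f}")
```

On such well-separated subspaces, almost all of the coefficient mass should connect points lying in the same subspace, which is what makes C usable as an affinity for clustering.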

Our algorithm consists of three main stages: the first stage is the encoder, which encodes the input modalities into a latent space. The encoder consists of

We also define [

The loss function is then rewritten as,

where

Assume

Similar to [4], we utilize linearized ADMM [31] to approximate the minimum of Eq. (7), since the resulting optimization functional is non-convex and its direct algorithmic solution is complicated. It has been shown that linearized ADMM is very effective for

where

where
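Eq. (7) is not reproduced in this text, but a linearized ADMM iteration on a group-norm penalty hinges on two ingredients: a gradient step on a linearized smooth term and the proximal operator of the group norm. The sketch below assumes, for illustration only, that the group norm couples entry (i, j) across the T self-representation matrices (in line with the group-sparse solution along data modalities mentioned in Section 1.1) and uses the hypothetical smooth term sum_t 0.5 ||Z_t - Z_t C_t||_F^2 on latent codes Z_t. It runs plain proximal-gradient steps and omits the multipliers, penalty updates and commutation loss of the full method.

```python
import numpy as np


def group_soft_threshold(C_stack, tau):
    """Proximal operator of tau * sum_{i,j} ||(C^1_ij, ..., C^T_ij)||_2.

    C_stack has shape (T, N, N), one coefficient matrix per modality. Entry (i, j)
    forms one group across the T modalities and is shrunk jointly: it either
    survives in every modality or is set to zero in every modality.
    """
    norms = np.sqrt(np.sum(C_stack ** 2, axis=0, keepdims=True))    # shape (1, N, N)
    scale = np.maximum(1.0 - tau / np.maximum(norms, 1e-12), 0.0)
    return C_stack * scale


def objective(C_stack, Z_stack, tau):
    """Assumed surrogate loss: sum_t 0.5 ||Z_t - Z_t C_t||_F^2 + tau * group norm."""
    fit = sum(0.5 * np.linalg.norm(Z - Z @ C) ** 2 for Z, C in zip(Z_stack, C_stack))
    return fit + tau * np.sqrt(np.sum(C_stack ** 2, axis=0)).sum()


def linearized_step(C_stack, Z_stack, tau, eta):
    """One proximal-gradient step: linearize the data fit, then apply the group prox."""
    grad = np.stack([Z.T @ (Z @ C - Z) for Z, C in zip(Z_stack, C_stack)])
    C_new = group_soft_threshold(C_stack - eta * grad, eta * tau)
    idx = np.arange(C_new.shape[1])
    C_new[:, idx, idx] = 0.0          # enforce a zero diagonal in every modality
    return C_new


# The prox on a tiny example: (3, 4) has norm 5 and becomes (2.4, 3.2); (0.1, 0.1) is zeroed.
toy = np.array([[[0.0, 3.0], [0.1, 0.0]],
                [[0.0, 4.0], [0.1, 0.0]]])
print(group_soft_threshold(toy, tau=1.0))

rng = np.random.default_rng(0)
Z_stack = rng.standard_normal((2, 10, 8))        # hypothetical latent codes: T=2, dim 10, N=8
eta = 1.0 / max(np.linalg.norm(Z, 2) ** 2 for Z in Z_stack)   # step <= 1 / Lipschitz constant
C = np.zeros((2, 8, 8))
start = objective(C, Z_stack, tau=0.1)
for _ in range(200):
    C = linearized_step(C, Z_stack, tau=0.1, eta=eta)
print(start, objective(C, Z_stack, tau=0.1))
```

Because the shrinkage is applied to each group as a whole, an entry (i, j) is kept or discarded jointly across modalities, which is the mechanism behind the group sparsity discussed in Section 1.1.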

After computing the gradient of the loss function, the weights of each multi-layer network corresponding to one modality are updated while the other modalities' networks are kept fixed. In other words, after reconstructing the data during the forward pass, the loss function determines the updates that back-propagate through each layer: the encoder of the first modality is updated first, then the self-expressive layer of that modality, and finally its decoder. Since the weights corresponding to each modality depend on the other modalities, we update each part of the network corresponding to one modality under the assumption that all network components corresponding to the other modalities are fixed. The resulting sparse coefficient matrices

Integrating the sparse coefficient matrices helps reinforce the relations between data points that exist in all data modalities, thus establishing cross-sensor consistency. Furthermore, adding the sparse coefficient matrices reduces the noise variance introduced by outliers. A similar approach was introduced in [34] for community detection in social networks, where an aggregation of multi-layer adjacency matrices was shown to provide a better signal-to-noise ratio and, ultimately, better performance. To distinguish the various classes in an unsupervised manner, we construct the affinity matrix as follows,

where
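Because the affinity equation itself is missing here, the following sketch assumes a construction that is common in subspace clustering and consistent with "adding the sparse coefficient matrices": each modality contributes |C_t| + |C_t|^T and the contributions are summed. Clusters are then read off with normalized spectral clustering in the style of [35]. The function names, the deterministic k-means initialization and the toy block-diagonal matrices are illustrative assumptions.

```python
import numpy as np


def fused_affinity(C_list):
    """Assumed fusion rule: sum over modalities of |C_t| + |C_t|^T."""
    return sum(np.abs(C) + np.abs(C).T for C in C_list)


def spectral_clustering(W, k, n_iter=100):
    """Normalized spectral clustering in the style of Ng, Jordan and Weiss [35]."""
    d = W.sum(axis=1)
    d_inv_sqrt = 1.0 / np.sqrt(np.maximum(d, 1e-12))
    M = d_inv_sqrt[:, None] * W * d_inv_sqrt[None, :]     # D^{-1/2} W D^{-1/2}
    _, vecs = np.linalg.eigh(M)                             # eigenvalues in ascending order
    U = vecs[:, -k:]                                        # k leading eigenvectors
    U = U / np.maximum(np.linalg.norm(U, axis=1, keepdims=True), 1e-12)
    # Deterministic farthest-point initialization, then plain k-means on the rows of U.
    centers = [U[0]]
    for _ in range(1, k):
        dist = np.min([np.linalg.norm(U - c, axis=1) for c in centers], axis=0)
        centers.append(U[np.argmax(dist)])
    centers = np.array(centers)
    for _ in range(n_iter):
        labels = np.argmin(((U[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2), axis=1)
        centers = np.array([U[labels == j].mean(axis=0) if np.any(labels == j) else centers[j]
                            for j in range(k)])
    return labels


rng = np.random.default_rng(1)
blocks = np.kron(np.eye(3), np.ones((4, 4))) - np.eye(12)   # 3 clusters of 4 points
C1 = blocks.copy()                                           # modality 1: ideal coefficients
C2 = blocks + 0.05 * rng.random((12, 12))                    # modality 2: slightly noisy
np.fill_diagonal(C2, 0.0)
labels = spectral_clustering(fused_affinity([C1, C2]), k=3)
print(labels)
```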

2.2 Theoretical discussion

In order to justify the multiple banks of self-expressive layers, we assume that each modality

The shared information can be represented as follows,

where

The proof of the proposition can be found in Appendix A. We show that, when one or more data modalities are perturbed, our proposed approach introduces less error into the overall affinity matrix than DMSC does. Hence, it preserves the performance and yields a graceful degradation of the clustering accuracy as an increasing number of modalities get corrupted by noise.

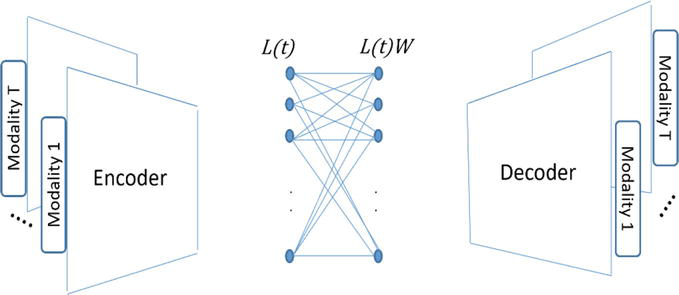

3. Affinity fusion deep multimodal subspace clustering

For completeness, we provide a brief overview of the Deep Multimodal Subspace Clustering algorithm proposed in [21]. As for DRoGSuRe, the Affinity Fusion Deep Multimodal Subspace Clustering (AFDMSC) network is composed of three main parts: a multimodal encoder, a self-expressive layer, and a multimodal decoder. The output of the encoder contributes to a common latent space for all modalities. The self-expressiveness property, applied through a fully connected layer between the encoder and the decoder, results in one common set of weights for all the data sensing modalities. This marks a departure from the way DRoGSuRe defines the latent space. Our proposed approach, as a result, safeguards the private information

where

Figure 2.

Deep multimodal subspace clustering diagram.
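The structural difference between a single shared self-expressive layer (as in AFDMSC) and one layer per modality (as in DRoGSuRe) can be seen with a closed-form surrogate. The sketch below replaces the actual regularizers of both methods with a Frobenius penalty `lam` and drops the zero-diagonal constraint, so each variant has a ridge-regression solution; the latent codes `Z_list` are random placeholders. In DRoGSuRe itself the group norm and the commutation loss couple the C_t, so a perturbation is not isolated as completely as in this decoupled surrogate. The example only shows how the two parameterizations propagate a perturbation of one modality; it is not the bound derived in Appendix A.

```python
import numpy as np


def private_self_expression(Z_list, lam):
    """One coefficient matrix per modality (a bank of self-expressive layers):
    C_t = argmin_C ||Z_t - Z_t C||_F^2 + lam ||C||_F^2 = (Z_t^T Z_t + lam I)^{-1} Z_t^T Z_t."""
    N = Z_list[0].shape[1]
    return [np.linalg.solve(Z.T @ Z + lam * np.eye(N), Z.T @ Z) for Z in Z_list]


def shared_self_expression(Z_list, lam):
    """A single coefficient matrix for all modalities:
    C = argmin_C sum_t ||Z_t - Z_t C||_F^2 + lam ||C||_F^2 = (G + lam I)^{-1} G, G = sum_t Z_t^T Z_t."""
    N = Z_list[0].shape[1]
    G = sum(Z.T @ Z for Z in Z_list)
    return np.linalg.solve(G + lam * np.eye(N), G)


rng = np.random.default_rng(0)
Z_list = [rng.standard_normal((6, 10)) for _ in range(3)]   # hypothetical latent codes, N = 10
lam = 0.5
private_before = private_self_expression(Z_list, lam)
shared_before = shared_self_expression(Z_list, lam)

# Perturb only the last modality (e.g., a degraded sensor) and refit both variants.
Z_noisy = Z_list[:2] + [Z_list[2] + rng.standard_normal((6, 10))]
private_after = private_self_expression(Z_noisy, lam)
shared_after = shared_self_expression(Z_noisy, lam)

print("private C_1 change:", np.linalg.norm(private_after[0] - private_before[0]))
print("shared C change:   ", np.linalg.norm(shared_after - shared_before))
```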

4. Experimental results

4.1 Dataset description

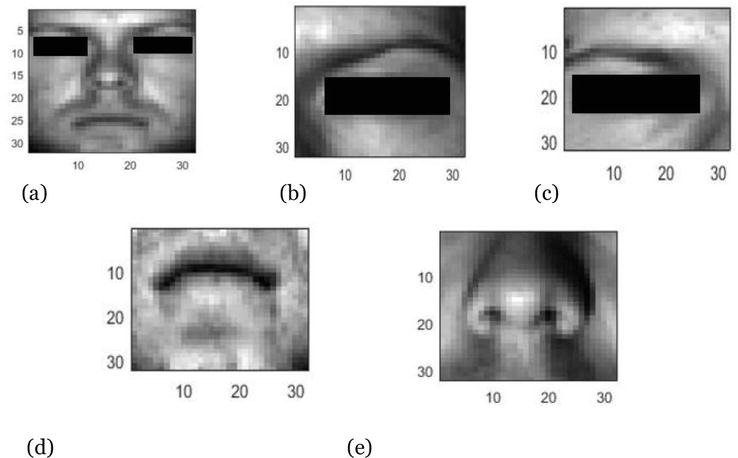

We evaluate our approach on two different datasets. The first is the Extended Yale-B (EYB) dataset [39], which has been used extensively in subspace clustering, as in [1, 40]. The dataset is composed of 64 frontal images of each of 38 individuals under different illumination conditions. In this work, we use the augmented data of [21], where facial components such as the left eye, right eye, nose and mouth have been cropped to represent four additional modalities. Images corresponding to each modality have been cropped to a size of 32

Figure 3.

Sample images from the augmented extended Yale-B Dataset. (a) Face. (b) Left eye. (c) Right eye. (d) Mouth. (e) Nose.

Figure 4.

Sample images from the ARL polarimetric dataset. (a) Visible. (b) DoLP. (c) S0. (d) S1. (e) S2.

4.2 Network structure

In the following, we elaborate on how we construct the neural network for each dataset. Similar to [21], we implemented DRoGSuRe with TensorFlow and used the adaptive momentum-based gradient descent method (Adam) [30] to minimize the loss function in Eq. (5) with a learning rate of

For the ARL dataset, we have five data modalities and therefore 5 different encoders, self-expressive layers and decoders. Each encoder is composed of three convolutional layers. The first layer consists of 5 convolutional filters of kernel size 3, the second layer has 7 filters of kernel size 1, and the last layer has 15 filters of kernel size 1.

For the EYB dataset, we also have five data modalities and therefore 5 different encoders, self-expressive layers and decoders. Each encoder is composed of three convolutional layers. The first layer consists of 10 convolutional filters of kernel size 5, the second layer has 20 filters of kernel size 3, and the last layer has 30 filters of kernel size 3.
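The layer specifications above fix the size of each encoder. The sketch below counts trainable parameters, assuming single-channel input images, square 2-D kernels with one bias per filter, and no other layers; it also assumes, as in DSC [25], that each self-expressive layer is a full N x N matrix, using the N = 2160 ARL training images reported in Section 4.3. These assumptions are for illustration and may not match the exact implementation.

```python
def conv_params(kernel, c_in, c_out, bias=True):
    """Weights of a 2-D convolution with a square kernel, plus one bias per filter."""
    return kernel * kernel * c_in * c_out + (c_out if bias else 0)


def encoder_params(layers, c_in=1):
    """layers: list of (n_filters, kernel_size) tuples, applied in order.

    c_in=1 assumes single-channel (grayscale) input images.
    """
    total = 0
    for n_filters, kernel in layers:
        total += conv_params(kernel, c_in, n_filters)
        c_in = n_filters          # the next layer sees this layer's feature maps
    return total


ARL_ENCODER = [(5, 3), (7, 1), (15, 1)]
EYB_ENCODER = [(10, 5), (20, 3), (30, 3)]

arl = encoder_params(ARL_ENCODER)   # 50 + 42 + 120 = 212
eyb = encoder_params(EYB_ENCODER)   # 260 + 1820 + 5430 = 7510
self_expressive = 2160 ** 2         # one N x N layer per modality, N = 2160 ARL training images
print(arl, eyb, self_expressive)
```

Under these assumptions the self-expressive layers dominate the parameter budget by several orders of magnitude, and DRoGSuRe carries one such layer per modality (five here) versus a single shared one in DMSC, consistent with the remark on parameter counts in Section 5.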

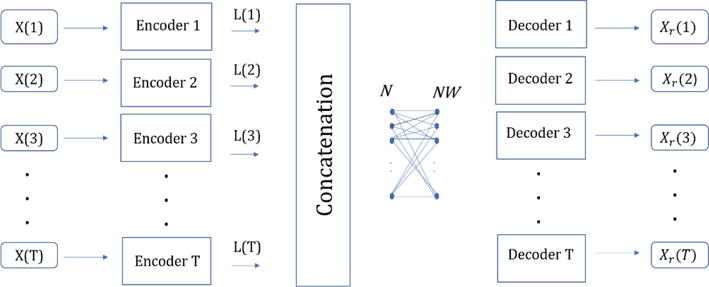

4.3 Noiseless results

In the following, we compare the performance of our approach with that of DMSC when learning the union-of-subspaces structure of noise-free data. First, we divide each dataset into training and validation sets, so that newly observed data can be classified using the structure learned from the current unlabeled data. The ARL expression dataset used for training consists of 2160 images per modality, and the validation baseline includes 720 images per modality. For the EYB, we randomly selected 1520 images per modality for training and 904 images for validation. The sparse solution

After learning the structure of the data clusters, we validate our results on the validation set. We extract the principal components (eigenvectors of the covariance matrix) of each cluster in the original (training) dataset to act as a representative subspace of its corresponding class. We subsequently project each new test point onto the subspace spanned by the principal components of each cluster. The

| Method | Learning | Validation |
|---|---|---|
| DMSC | 97.59% | 98.33% |
| DRoGSuRe | 100% | 100% |

Table 1.

| Method | Learning | Validation |
|---|---|---|
| DMSC | 98.82% | 98.89% |
| DRoGSuRe | 98.42% | 98.76% |

Table 2.

Performance comparison for EYB dataset.
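The validation procedure of Section 4.3 (principal components per training cluster, then projection of each new point) can be sketched as follows. The assignment rule is truncated in the text above; the sketch assumes that each test point goes to the cluster whose principal subspace leaves the smallest projection residual. The number of retained components `r`, the function names, and the synthetic data are hypothetical.

```python
import numpy as np


def fit_cluster_subspaces(X, labels, r):
    """For each cluster: its mean and top-r principal directions.

    X is D x N (one sample per column); labels are the cluster indices found during training.
    """
    models = {}
    for k in np.unique(labels):
        Xk = X[:, labels == k]
        mu = Xk.mean(axis=1, keepdims=True)
        # Left singular vectors of the centered data = eigenvectors of the covariance matrix.
        U, _, _ = np.linalg.svd(Xk - mu, full_matrices=False)
        models[k] = (mu, U[:, :r])
    return models


def assign_by_projection(models, Y):
    """Assign each column of Y to the cluster whose subspace leaves the smallest residual."""
    keys = list(models)
    residuals = []
    for k in keys:
        mu, B = models[k]
        Yc = Y - mu
        residuals.append(np.linalg.norm(Yc - B @ (B.T @ Yc), axis=0))
    return np.array(keys)[np.argmin(np.array(residuals), axis=0)]


rng = np.random.default_rng(2)
bases = [np.linalg.qr(rng.standard_normal((10, 2)))[0] for _ in range(2)]
X_train = np.hstack([B @ rng.standard_normal((2, 20)) for B in bases])
y_train = np.repeat([0, 1], 20)
X_test = np.hstack([B @ rng.standard_normal((2, 5)) for B in bases])
y_test = np.repeat([0, 1], 5)

models = fit_cluster_subspaces(X_train, y_train, r=2)
y_pred = assign_by_projection(models, X_test)
print((y_pred == y_test).mean())
```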

4.4 Noise training with single and multiple modalities

In the following, we test the robustness of our approach in the case of noisy learning. We distort one modality at a time by shuffling the pixels of all images in that modality during the training phase. By doing so, we perturb the structure of the sparse coefficient matrix associated with that modality, thus impacting the overall matrix W for both DRoGSuRe and DMSC. Testing on clean (undistorted) data then reveals the impact of a perturbed, and hence inadequate, training, e.g., due to insufficient data or non-convergence. This can also be viewed as augmenting the training data with new information, or a new view of one modality, that is not necessarily contained in the testing or validation data. Moreover, we repeat the same experiment with two distorted modalities before learning the sparse coefficient matrices for both DMSC and DRoGSuRe. The results for the ARL dataset are reported in Tables 3 and 4, while the results for the EYB dataset are shown in Tables 5 and 6. For the ARL dataset, we refer to Visible, S0, S1, S2 and DoLP as Mod 0, 1, 2, 3 and 4, respectively. For the EYB dataset, we refer to Face, left eye, nose, mouth and right eye as Mod 0, 1, 2, 3 and 4, respectively. In the tables, each row names the distorted modality (or modalities), L denotes learning results and V denotes validation results. The results show that DRoGSuRe improves the clustering accuracy over DMSC on both the learning and validation sets, by roughly 3 to 13 percentage points across Tables 3 to 6. This is again because perturbing one or two modalities has less impact on the overall performance of DRoGSuRe than on that of DMSC.
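The pixel-shuffling distortion can be sketched as follows. The text does not say whether a single permutation is shared by all images of the modality; this sketch assumes an independent permutation per image. That choice matters: a permutation shared by all images is an orthogonal transform of the data, so it leaves any exact linear self-expression X = XC of the raw pixels unchanged and only disturbs the convolutional encoder, whereas independent permutations also destroy the linear relations between images. The function name and array shapes are hypothetical.

```python
import numpy as np


def shuffle_pixels(images, rng):
    """Return a copy of one modality in which every image gets its own random pixel permutation.

    images: array of shape (n_images, height, width).
    """
    n, h, w = images.shape
    flat = images.reshape(n, h * w).copy()   # copy so the clean modality is left untouched
    for row in flat:                         # each row is a view into `flat`
        rng.shuffle(row)                     # permute this image's pixels in place
    return flat.reshape(n, h, w)


rng = np.random.default_rng(3)
modality = rng.random((4, 8, 8))             # hypothetical modality: 4 images of 8 x 8 pixels
distorted = shuffle_pixels(modality, rng)
```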

| Distorted modality | DMSC L | DMSC V | DRoGSuRe L | DRoGSuRe V |
|---|---|---|---|---|
| Mod 0 | 87.17% | 86.67% | 95.37% | 95% |
| Mod 1 | 91.67% | 90% | 98.29% | 98.33% |
| Mod 2 | 92.77% | 92.78% | 99.17% | 99.44% |
| Mod 3 | 90.55% | 90.57% | 99.31% | 99.44% |
| Mod 4 | 92.78% | 91.11% | 96.44% | 96.67% |

Table 3.

ARL dataset: Distorting one modality.

| Distorted modalities | DMSC L | DMSC V | DRoGSuRe L | DRoGSuRe V |
|---|---|---|---|---|
| Mod 0 & 1 | 82.22% | 82.78% | 92.27% | 94.58% |
| Mod 1 & 2 | 91.11% | 91.11% | 97.22% | 97.36% |
| Mod 0 & 3 | 85.51% | 82.56% | 93.01% | 95.42% |
| Mod 1 & 4 | 91.67% | 89.44% | 97.22% | 97.36% |
| Mod 2 & 3 | 90% | 89.72% | 97.69% | 97.78% |

Table 4.

ARL dataset: Distorting two modalities.

| Distorted modality | DMSC L | DMSC V | DRoGSuRe L | DRoGSuRe V |
|---|---|---|---|---|
| Mod 0 | 87.96% | 88.5% | 93.29% | 94.69% |
| Mod 1 | 91.84% | 91.15% | 95.79% | 97.46% |
| Mod 2 | 89.01% | 88.72% | 98.03% | 97.57% |
| Mod 3 | 92.69% | 91.81% | 95.59% | 96.68% |
| Mod 4 | 91.45% | 91.59% | 97.17% | 97.35% |

Table 5.

EYB dataset: Distorting one modality.

| Distorted modalities | DMSC L | DMSC V | DRoGSuRe L | DRoGSuRe V |
|---|---|---|---|---|
| Mod 0 & 2 | 86.64% | 85.18% | 96.84% | 96.13% |
| Mod 0 & 4 | 87.83% | 89.16% | 94.54% | 95.8% |
| Mod 1 & 4 | 86.38% | 86.06% | 94.21% | 95.8% |
| Mod 2 & 3 | 88.22% | 84.96% | 91.58% | 93.92% |
| Mod 3 & 4 | 88.03% | 86.28% | 94.08% | 95.35% |

Table 6.

EYB dataset: Distorting two modalities.

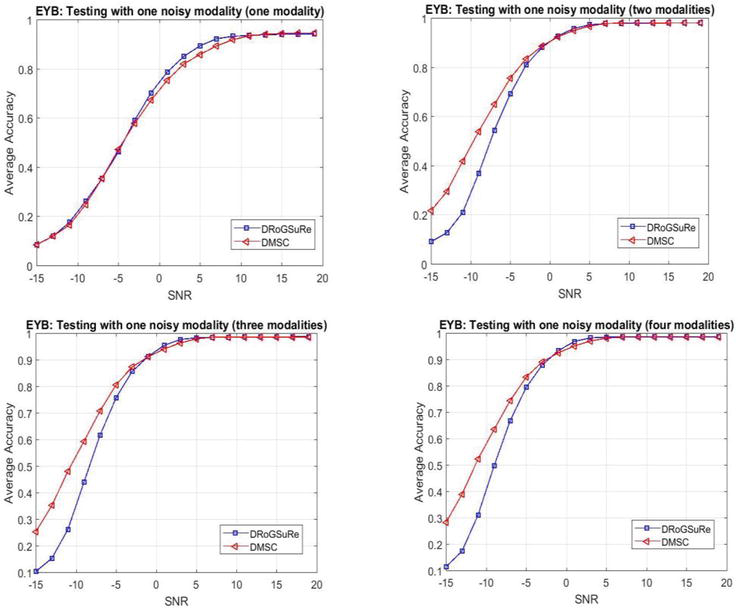

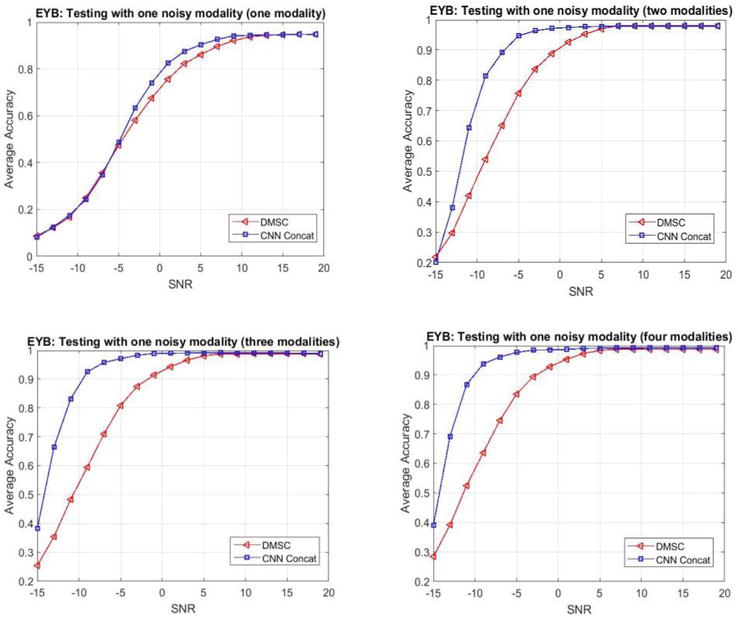

4.5 Testing with limited noisy data

In the following, we study the effect of training with noiseless data while validating with noisy and missing data. We add Gaussian noise to one data modality in the validation set and vary the SNR by varying the noise variance. We first assume that only the noisy modality is available at testing, and then keep increasing the number of available noiseless data modalities alongside the noisy one. We average the results over all combinations of data modalities for the ARL and EYB datasets; the results are depicted in Figures 5 and 6, respectively. For the ARL dataset, we note an increasing gap between DMSC and DRoGSuRe as we augment the sensing capacity with noise-free modalities. On the other hand, for the EYB dataset at lower SNR, the performance of DRoGSuRe is slightly worse than that of DMSC, which might be explained by the results in Table 2, since the training accuracy of DMSC is slightly better than that of DRoGSuRe in the case of clean training. At higher SNR, however, the performance of the two approaches is very close.
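The noise injection at a prescribed SNR can be sketched as below, using the standard relation SNR_dB = 10 log10(P_signal / sigma^2). The definition of signal power (mean squared pixel value over the whole modality), the function name and the constant test images are assumptions, since the text does not specify them.

```python
import numpy as np


def add_noise_at_snr(images, snr_db, rng):
    """Add zero-mean white Gaussian noise at a target SNR (in dB).

    Signal power is assumed to be the mean squared pixel value over the whole modality;
    the noise variance then follows from SNR_dB = 10 * log10(P_signal / sigma^2).
    """
    signal_power = np.mean(np.asarray(images, dtype=float) ** 2)
    noise_var = signal_power / 10.0 ** (snr_db / 10.0)
    return images + rng.normal(0.0, np.sqrt(noise_var), size=images.shape)


rng = np.random.default_rng(4)
clean = np.ones((200, 32, 32))                 # hypothetical validation modality
for snr_db in (0, 10, 20):
    noisy = add_noise_at_snr(clean, snr_db, rng)
    measured = 10 * np.log10(np.mean(clean ** 2) / np.mean((noisy - clean) ** 2))
    print(snr_db, round(measured, 2))
```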

Figure 5.

ARL noiseless training and validating on limited noisy data.

Figure 6.

EYB noiseless training and validating on limited noisy data.

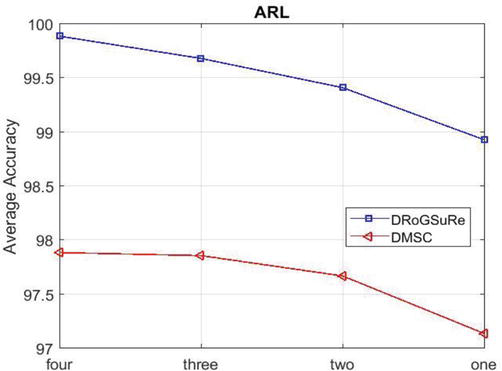

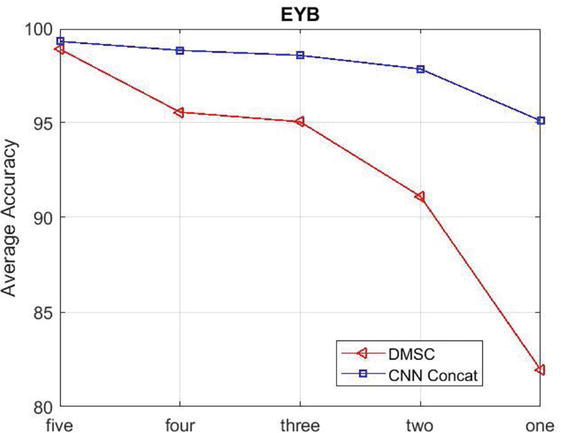

4.6 Missing modalities during testing

In the following, we evaluate the performance of DRoGSuRe and DMSC when data modalities are missing during testing. It is not uncommon for one or more sensors to be silent during testing, which motivates this experiment. We try different combinations of available modalities during testing and average the clustering accuracy over these trials. The results are depicted in Figures 7 and 8 for the ARL and EYB data, respectively. Again, we notice a significant improvement of DRoGSuRe over DMSC for the ARL dataset. For the EYB dataset, there is a slight improvement of DRoGSuRe over DMSC. This improvement can be attributed to the fact that our approach introduces less error into the overall affinity matrix than DMSC does, hence preserving the performance and yielding a graceful degradation of the accuracy, even though DMSC was the state of the art for the EYB dataset.
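The averaging over subsets of available modalities can be organized as below. `evaluate` is a hypothetical callback that would run the clustering-based classification on the given subset of modalities and return its accuracy; the placeholder scorer only exercises the bookkeeping and produces no real results.

```python
from itertools import combinations
from statistics import mean


def average_over_subsets(modalities, k, evaluate):
    """Average evaluate(subset) over every subset of k available modalities.

    Returns the mean score and the number of subsets that were evaluated.
    """
    subsets = list(combinations(modalities, k))
    return mean(evaluate(s) for s in subsets), len(subsets)


def placeholder_accuracy(subset):
    """Hypothetical stand-in for a real clustering-accuracy run on `subset`."""
    return 0.8 + 0.04 * len(subset)


mods = ["Mod 0", "Mod 1", "Mod 2", "Mod 3", "Mod 4"]
for k in range(5, 0, -1):
    acc, n_subsets = average_over_subsets(mods, k, placeholder_accuracy)
    print(f"{k} available modalities: {n_subsets} subsets, mean accuracy {acc:.2f}")
```

For five modalities this enumerates 1, 5, 10, 10 and 5 subsets for k = 5, 4, 3, 2 and 1 available modalities, so the low-k averages rest on several trials each.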

Figure 7.

Missing modalities during testing for ARL dataset.

Figure 8.

Missing modalities during testing for EYB dataset.

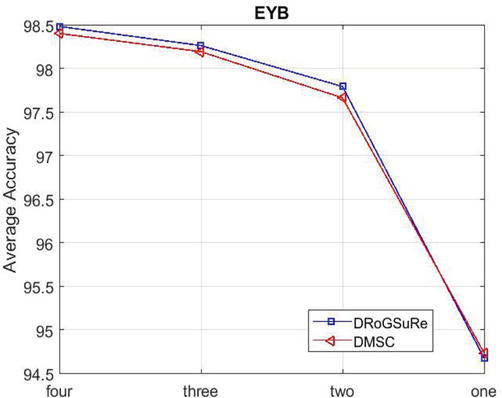

5. Feature concatenation

Here we propose a rationale, along with an alternative solution, for enhancing the performance on the EYB multi-modal data. Due to the specific structure of the EYB multi-modal data, concatenating the features corresponding to each modality is a reasonable alternative, since it adjoins the features representing each part of the face. Because the four facial-component modalities correspond to non-overlapping partitions of the face, the feature set corresponding to each partition provides purely complementary information. A similar idea, referred to as late concatenation, is proposed in [21], where the multi-modal data are integrated in the last stage of the encoder. Their decoder structure remains the same for either affinity fusion or late concatenation, which entails de-concatenating the multi-modal data prior to decoding. Our proposed approach, on the other hand, results in a self-expressive layer being driven by the concatenated features from the

Figure 9.

CNN Concatenation Network.

where

We compared the performance of our proposed approach against the late concatenation approach in [21]; the results for the EYB dataset are reported in Table 7.

| Method | Learning | Validation |
|---|---|---|
| DMSC Late Concatenation | 95.66% | 94.7% |
| CNN Concatenation Network | 99.28% | 99.3% |

Table 7.

Concatenation performance for EYB dataset.

From Table 7, we conclude that concatenating the features from the encoder and feeding the concatenated information to each decoder branch achieves better performance for this type of multi-modal data structure. We attribute this enhancement to the combination of an efficient extraction of basic features from the whole face with finer features from each part of the face. Beyond this efficiency, the concatenation may also be intuitively viewed as an adequate mosaicking, in which different patterns complement each other. In the following, we show how our proposed approach performs in two cases: missing and noisy test data. The results of the new approach, which we refer to as the CNN concatenation network, are compared to those of the state-of-the-art DMSC network [21]. We start by training the auto-encoder network using 75% of the data and then test on the rest of the data. In Figure 10, we show how the performance degrades as the number of available modalities at testing decreases from five to one; the CNN concatenation network clearly outperforms the DMSC network. We also repeated the experiment of subsection 4.5: we train the network with noiseless data, add Gaussian noise to one data modality at testing, and vary the number of available modalities at testing from one to four. The results, depicted in Figure 11, show that the CNN concatenation network is more robust to noise than DMSC.

Figure 10.

Missing modalities during testing for EYB dataset.

Figure 11.

EYB noiseless training and validating on limited noisy data.

In addition, we used the concatenation network to perform object clustering on the ARL data and compared its clustering performance with both DMSC and DRoGSuRe. The results, reported in Table 8, show that DRoGSuRe still outperforms the other approaches on the ARL dataset. Although DRoGSuRe involves more trainable parameters than the other approaches, since it has multiple self-expressive layers, it is more robust to noise and to limited data availability during testing.

| Method | Learning | Validation |
|---|---|---|
| DMSC | 97.59% | 98.33% |
| DRoGSuRe | 100% | 100% |
| CNN Concat | 99.44% | 99.17% |

Table 8.

Concatenation performance for ARL dataset.

6. Conclusion

In this paper, we proposed a deep multi-modal approach that fuses data by recovering the underlying subspaces of data observations corrupted by noise, and that scales to complex data scenarios. DRoGSuRe provides a natural way to fuse multi-modal data by employing the self-representation matrix as an embedding for each data modality. Experimental results show a significant improvement of DRoGSuRe over DMSC under different types of potential limitations, as well as robustness with limited sensing modalities. We also proposed the concatenated CNN model, which can perform better for certain multi-modal data structures, such as the facial components of the EYB dataset.

Acknowledgments

This work was in part supported by DOE-National Nuclear Security Administration through CNEC-NCSU under Award DE-NA0002576. The first author was also in part supported by DTRA.

Appendix A

To theoretically compare our proposed variational scaling fusion approach DRoGSuRe to DMSC, we proceed by way of a first-order perturbation analysis on the parameter set

Adopting the original formulation for the first persistently differential scaling approach, namely that

A first-order perturbation of the data, which may be due to noise or to the degradation of a given sensor, results in a perturbation of the UoS parameters,

For the first method, each modality will have an associated subspace cluster parameter set

where the unperturbed overall sparse coefficient matrix is written as follows,

Proof. We first write the affinity matrix associated with DRoGSuRe as,

where the superscript

where

Letting

Given the

Since DMSC assumes having one sparse coefficient matrix

The affinity matrix associated with DMSC can be written as follows,

Similarly, the unperturbed affinity matrix will be as follows,

From Eqs. (A10) and (A11), the magnitude of the difference can be written as follows,

Letting

In light of the above two bounds, and the results of [42], where it is shown that the spectral clustering dependent on the respective projection operators

where

where

References

1. Elhamifar E, Vidal R. Sparse subspace clustering: Algorithm, theory, and applications. IEEE Transactions on Pattern Analysis and Machine Intelligence. 2013;35:2765-2781
2. Favaro P, Vidal R, Ravichandran A. A closed form solution to robust subspace estimation and clustering. In: CVPR 2011. Colorado Springs, Colorado, USA: IEEE; 2011. pp. 1801-1807
3. Li CG, Vidal R. Structured sparse subspace clustering: A unified optimization framework. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Boston, MA, USA: IEEE; 2015. pp. 277-286
4. Bian X, Panahi A, Krim H. Bi-sparsity pursuit: A paradigm for robust subspace recovery. Signal Processing. 2018;152:148-159
5. Yang AY, Rao SR, Ma Y. Robust statistical estimation and segmentation of multiple subspaces. In: 2006 Conference on Computer Vision and Pattern Recognition Workshop (CVPRW’06). New York, NY, USA: IEEE; 2006. p. 99
6. Hong W, Wright J, Huang K, Ma Y. Multiscale hybrid linear models for lossy image representation. IEEE Transactions on Image Processing. 2006;15:3655-3671
7. Ho J, Yang MH, Lim J, Lee KC, Kriegman D. Clustering appearances of objects under varying illumination conditions. In: 2003 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, 2003. Proceedings. Madison, Wisconsin, USA: IEEE; 2003. p. I
8. Hellwich O, Wiedemann C. Object extraction from high-resolution multisensor image data. In: Third International Conference Fusion of Earth Data. Sophia Antipolis, France; 2000
9. Korona Z, Kokar MM. Model theory based fusion framework with application to multisensor target recognition. In: 1996 IEEE/SICE/RSJ International Conference on Multisensor Fusion and Integration for Intelligent Systems (Cat. No. 96TH8242). Tokyo, Japan: IEEE; 1996. pp. 9-16
10. Ghanem S, Panahi A, Krim H, Kerekes RA, Mattingly J. Information subspace-based fusion for vehicle classification. In: 2018 26th European Signal Processing Conference (EUSIPCO). Rome, Italy: IEEE; 2018. pp. 1612-1616
11. Ghanem S, Roheda S, Krim H. Latent code-based fusion: A Volterra neural network approach. 2021. arXiv preprint arXiv:2104.04829
12. Wang H, Skau E, Krim H, Cervone G. Fusing heterogeneous data: A case for remote sensing and social media. IEEE Transactions on Geoscience and Remote Sensing. 2018;56:6956-6968
13. Soong FK, Rosenberg AE. On the use of instantaneous and transitional spectral information in speaker recognition. IEEE Transactions on Acoustics, Speech, and Signal Processing. 1988;36:871-879
14. Xu L, Krzyzak A, Suen CY. Methods of combining multiple classifiers and their applications to handwriting recognition. IEEE Transactions on Systems, Man, and Cybernetics. 1992;22:418-435
15. Ngiam J, Khosla A, Kim M, Nam J, Lee H, Ng A. Multimodal deep learning. In: International Conference on Machine Learning (ICML). Bellevue, Washington, USA: International Machine Learning Society (IMLS); 2011. pp. 689-696
16. Ramachandram D, Taylor GW. Deep multimodal learning: A survey on recent advances and trends. IEEE Signal Processing Magazine. 2017;34:96-108
17. Valada A, Oliveira GL, Brox T, Burgard W. Deep multispectral semantic scene understanding of forested environments using multimodal fusion. In: International Symposium on Experimental Robotics. Tokyo, Japan: Springer; 2016. pp. 465-477
18. Roheda S, Riggan BS, Krim H, Dai L. Cross-modality distillation: A case for conditional generative adversarial networks. In: 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). Calgary, Alberta, Canada: IEEE; 2018. pp. 2926-2930
19. Roheda S, Krim H, Luo ZQ, Wu T. Decision level fusion: An event driven approach. In: 2018 26th European Signal Processing Conference (EUSIPCO). Rome, Italy: IEEE; 2018. pp. 2598-2602
20. Zhu P, Hui B, Zhang C, Du D, Wen L, Hu Q. Multi-view deep subspace clustering networks. 2019. arXiv preprint arXiv:1908.01978
21. Abavisani M, Patel VM. Deep multimodal subspace clustering networks. IEEE Journal of Selected Topics in Signal Processing. 2018;12:1601-1614
22. Wang Q, Cheng J, Gao Q, Zhao G, Jiao L. Deep multi-view subspace clustering with unified and discriminative learning. IEEE Transactions on Multimedia. 2020;23:3483-3493
23. Ghanem S, Panahi A, Krim H, Kerekes RA. Robust group subspace recovery: A new approach for multi-modality data fusion. IEEE Sensors Journal. 2020;20:12307-12316
24. Sun X, Cheng M, Min C, Jing L. Self-supervised deep multi-view subspace clustering. In: Asian Conference on Machine Learning. Nagoya, Japan: PMLR; 2019. pp. 1001-1016
25. Ji P, Zhang T, Li H, Salzmann M, Reid I. Deep subspace clustering networks. Advances in Neural Information Processing Systems. 2017;30:24-33
26. Bian X, Krim H. Bi-sparsity pursuit for robust subspace recovery. In: 2015 IEEE International Conference on Image Processing (ICIP). Québec City, Québec, Canada: IEEE; 2015. pp. 3535-3539
27. Roheda S, Krim H, Riggan BS. Robust multi-modal sensor fusion: An adversarial approach. IEEE Sensors Journal. 2020;21:1885-1896
28. Roheda S, Krim H, Riggan BS. Commuting conditional GANs for multi-modal fusion. In: ICASSP 2020–2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). Barcelona, Spain: IEEE; 2020. pp. 3197-3201
29. Roheda S, Krim H, Luo ZQ, Wu T. Event driven fusion. 2019. arXiv preprint arXiv:1904.11520
30. Kingma DP, Ba J. Adam: A method for stochastic optimization. 2014. arXiv preprint arXiv:1412.6980
31. Lin Z, Liu R, Su Z. Linearized alternating direction method with adaptive penalty for low-rank representation. In: Advances in Neural Information Processing Systems. Granada, Spain: NIPS; 2011. pp. 612-620
32. Rockafellar RT. Augmented Lagrange multiplier functions and duality in nonconvex programming. SIAM Journal on Control. 1974;12:268-285
33. Luenberger DG, Ye Y, et al. Linear and Nonlinear Programming. Vol. 2. Springer; 1984
34. Taylor D, Shai S, Stanley N, Mucha PJ. Enhanced detectability of community structure in multilayer networks through layer aggregation. Physical Review Letters. 2016;116:228301
35. Ng AY, Jordan MI, Weiss Y. On spectral clustering: Analysis and an algorithm. In: Advances in Neural Information Processing Systems. Vancouver, British Columbia, Canada: NIPS; 2002. pp. 849-856
36. Gabriel HH, Spiliopoulou M, Nanopoulos A. Eigenvector-based clustering using aggregated similarity matrices. In: Proceedings of the 2010 ACM Symposium on Applied Computing. Sierre, Switzerland; 2010. pp. 1083-1087
37. Dong X, Frossard P, Vandergheynst P, Nefedov N. Clustering on multi-layer graphs via subspace analysis on Grassmann manifolds. IEEE Transactions on Signal Processing. 2013;62:905-918
38. Chen PY, Hero AO. Multilayer spectral graph clustering via convex layer aggregation: Theory and algorithms. IEEE Transactions on Signal and Information Processing over Networks. 2017;3:553-567
39. Lee KC, Ho J, Kriegman DJ. Acquiring linear subspaces for face recognition under variable lighting. IEEE Transactions on Pattern Analysis and Machine Intelligence. 2005;27:684-698
40. Liu G, Lin Z, Yan S, Sun J, Yu Y, Ma Y. Robust recovery of subspace structures by low-rank representation. IEEE Transactions on Pattern Analysis and Machine Intelligence. 2012;35:171-184
41. Hu S, Short NJ, Riggan BS, Gordon C, Gurton KP, Thielke M, et al. A polarimetric thermal database for face recognition research. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops. Las Vegas, NV, USA: IEEE; 2016. pp. 187-194
42. Hunter B, Strohmer T. Performance analysis of spectral clustering on compressed, incomplete and inaccurate measurements. 2010. arXiv preprint arXiv:1011.0997